Migrating to GuardDuty Malware Protection for S3

September 1, 2025 by Meg Ashby

Reducing costs and complexity for protecting S3 buckets from malware

As part of building security-minded applications, I often need to handle files coming directly from end users. Since these uploads come from untrusted sources, they have to be scanned to ensure they don’t contain malicious content. When the volume is substantial (millions of documents each month), the scanning solution has to be both scalable and cost-effective.

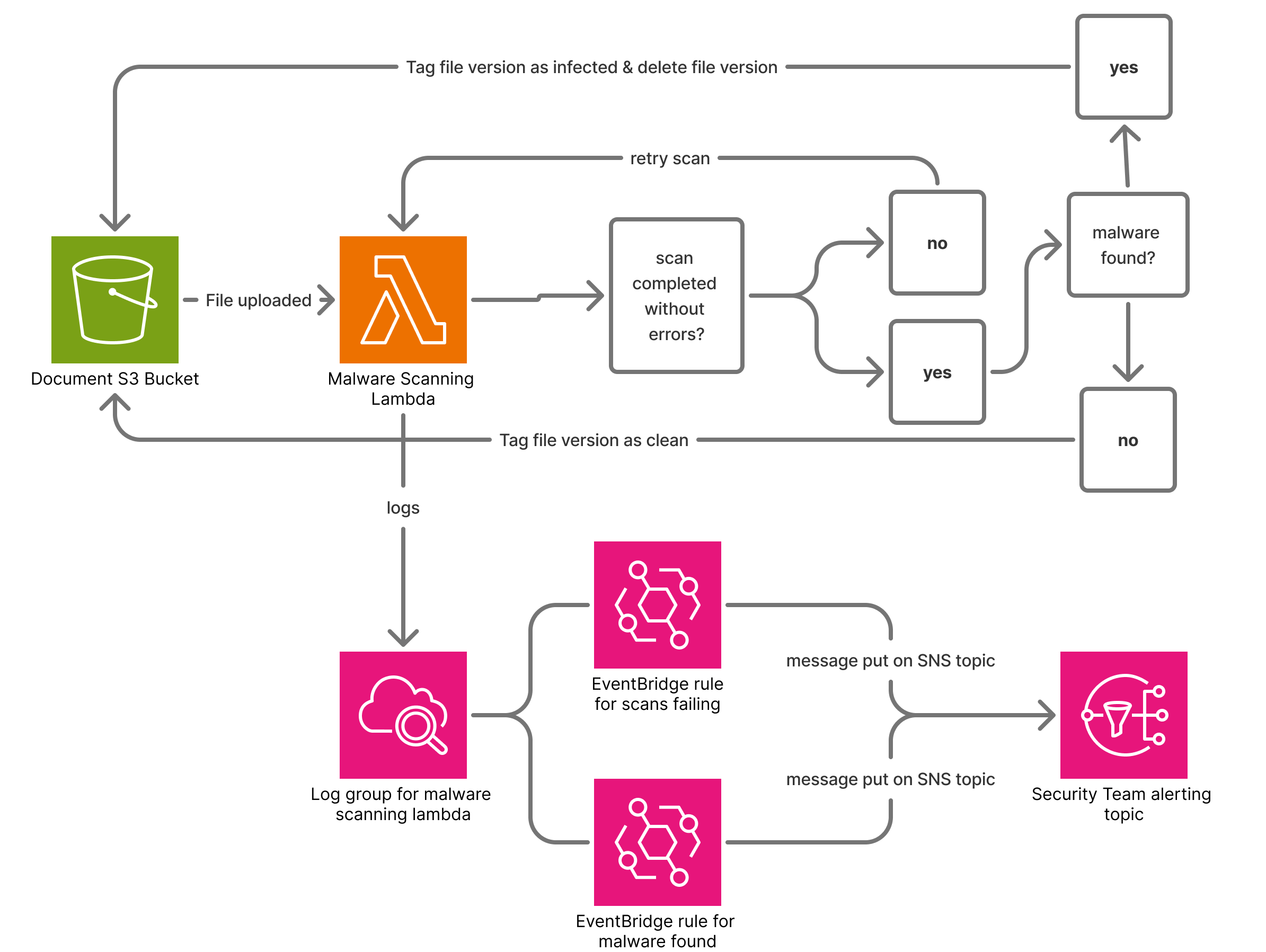

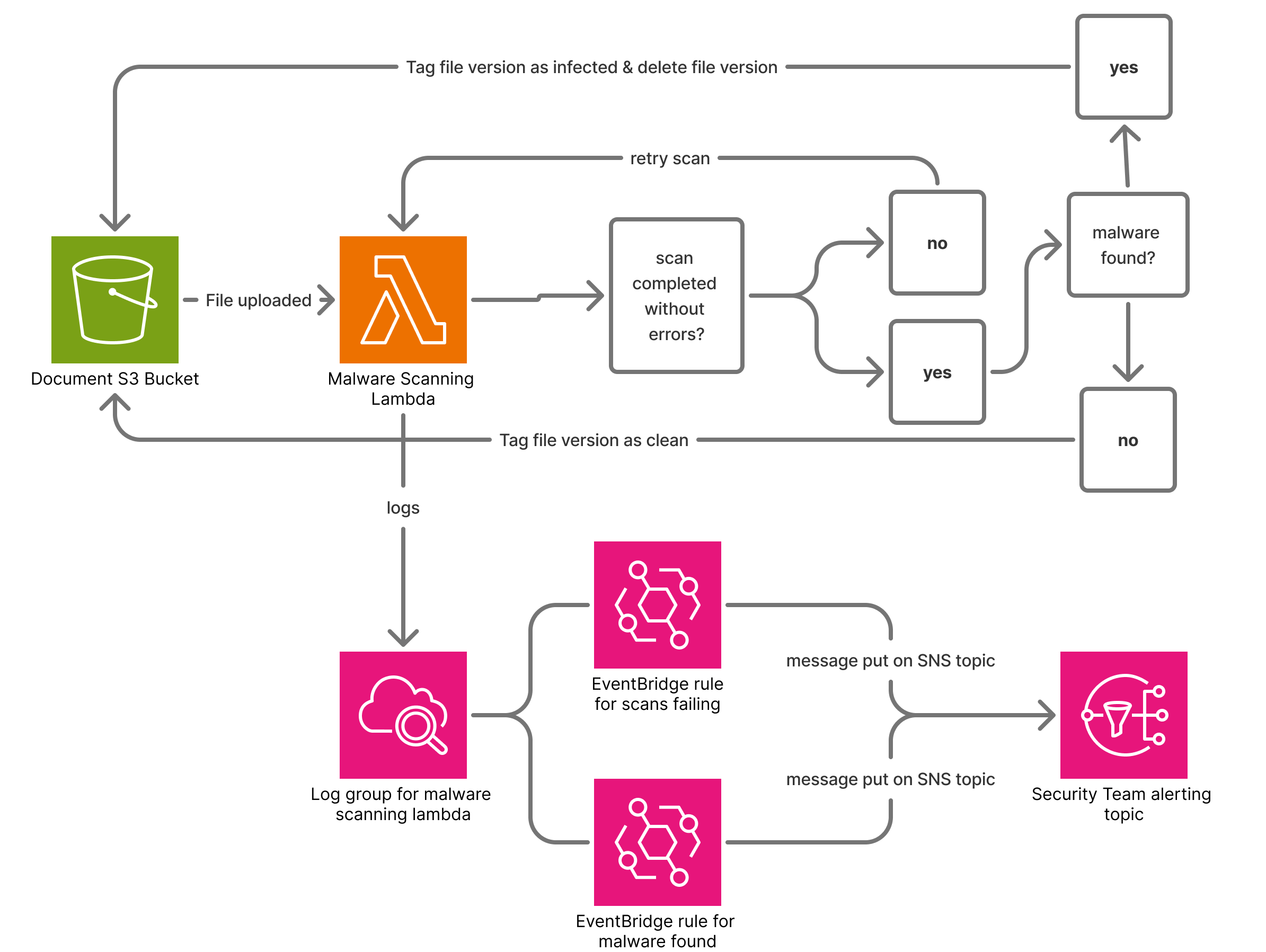

Previously, I solved this with a custom serverless pipeline built on S3 event notifications, Lambda, and ClamAV, based on cdk-serverless-clamscan.

While this approach scaled well, at higher volumes I ran into two big challenges:

- High start-up time: each Lambda invocation pulled ClamAV database definitions from an S3 bucket, delaying scans and leading to long running times

- Large memory footprint: the ClamAV database files were large, forcing us to allocate high memory settings.

Together, long run times and large memory requirements made the Lambda invocations quite expensive.

When AWS announced GuardDuty malware scanning for S3, I saw the chance to reduce both costs and operational overhead (important factors for start-ups and small security teams). After migrating, I saw three major benefits:

- Large cost savings: the Lambda running costs dropped 99.8% (more on why it's not 100% below).

- Faster scans: time from document upload to completed scan decreased by ~75%. Recall that our custom Lambda spent a lot of time loading the virus definitions database on each invocation; I suspect the GuardDuty team has found a way to optimize this.

- A simplified architecture: since GuardDuty Malware Protection for S3 integrates findings into our existing GuardDuty setup, I no longer needed to maintain custom monitoring and alerting systems for when malware was found.

These improvements allowed us to replace our complex custom pipeline:

with a much simpler model where we don’t manage CloudWatch Events directly.


Despite the benefits, we still had to account for functionality we previously relied on:

- GuardDuty currently only supports tagging files, not deleting them. We added a dedicated Lambda to delete files flagged as malicious. I suspect deletion will be natively supported soon, as the existing actions argument leaves room for alternatives to tagging.

- With our Lambda approach, we could retry failed scans or re-scan on demand. This was particularly helpful when scans failed due to the virus database being updated daily or other transient errors. When managed by GuardDuty, we lost the ability to re-trigger scans via both API and UI. Moreover, I saw elevated scan failures from GuardDuty-managed scans, mostly attributed to password-protected CSV files being marked as UNSUPPORTED.

To handle failed scans, I created a CloudWatch Events rule with the following pattern:

{
  "detail-type": ["GuardDuty Malware Protection Object Scan Result"],
  "detail": {
    "scanResultDetails": {
      "scanResultStatus": ["UNSUPPORTED", "ACCESS_DENIED", "FAILED"]
    }
  },
  "source": ["aws.guardduty"]
}

to invoke our legacy custom scanning Lambda. Because I had to maintain both a delete-specific Lambda and our bespoke scanning Lambda to handle GuardDuty failures, we still see small costs associated with these resources, but they’re much lower than in our original design.
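As a rough illustration of the glue described above, here is a minimal Python sketch that routes a scan-result event to either a delete or a re-scan. It is not the code from this migration: it folds the two Lambdas into one handler for brevity, the `s3ObjectDetails` field names follow my reading of the GuardDuty scan-result event shape, and `decide_action`, `handler`, and the `rescan` callback (a stand-in for the legacy ClamAV scanner) are hypothetical names.

```python
# Sketch only: route GuardDuty S3 scan results to "delete" or "rescan".
# Field names under "detail" are assumptions based on the documented event shape.

RETRY_STATUSES = {"UNSUPPORTED", "ACCESS_DENIED", "FAILED"}


def decide_action(event):
    """Return 'delete', 'rescan', or 'ignore' for one scan-result event."""
    details = event.get("detail", {}).get("scanResultDetails", {})
    status = details.get("scanResultStatus")
    if status == "THREATS_FOUND":
        return "delete"
    if status in RETRY_STATUSES:
        return "rescan"
    return "ignore"


def handler(event, context=None, s3_client=None, rescan=None):
    """Lambda-style entry point; s3_client and rescan are injectable for testing."""
    action = decide_action(event)
    obj = event.get("detail", {}).get("s3ObjectDetails", {})
    bucket, key = obj.get("bucketName"), obj.get("objectKey")

    if action == "delete":
        if s3_client is None:
            import boto3  # imported lazily so the routing logic is testable offline
            s3_client = boto3.client("s3")
        # On a versioned bucket this only adds a delete marker.
        s3_client.delete_object(Bucket=bucket, Key=key)
    elif action == "rescan" and rescan is not None:
        # Hypothetical hook: hand the object to the legacy scanner.
        rescan(bucket, key)

    return {"action": action, "bucket": bucket, "key": key}
```

Keeping the decision in a pure function makes it easy to unit test without AWS credentials; a rule that also matches `THREATS_FOUND` would be needed for the delete path to fire.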

An additional note for those deploying GuardDuty Organization configurations and GuardDuty Organization Feature configurations in IaC:

Malware Protection Plan is a distinct resource type (CloudFormation & Terraform). Unlike other GuardDuty features, it requires an IAM role at provisioning time, which effectively forces deployment in the target account rather than solely from the delegated admin. This makes it an outlier in GuardDuty’s otherwise centralized model.

Hopefully this is streamlined in the future.
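To make the role requirement concrete, here is a hedged sketch of the same resource created through the GuardDuty API with boto3's `create_malware_protection_plan`, rather than through CloudFormation or Terraform. It is illustrative only: `build_plan_request` and `create_plan` are hypothetical helper names, and the bucket name and role ARN are placeholders you would supply from the target account.

```python
# Sketch only: the Malware Protection Plan request needs a role up front.

def build_plan_request(bucket_name, role_arn, prefixes=None, tagging=True):
    """Build keyword arguments for guardduty.create_malware_protection_plan."""
    protected = {"BucketName": bucket_name}
    if prefixes:
        protected["ObjectPrefixes"] = list(prefixes)
    return {
        # The IAM role GuardDuty assumes to read and tag objects.
        "Role": role_arn,
        "ProtectedResource": {"S3Bucket": protected},
        # Tagging is the only post-scan action mentioned in this post.
        "Actions": {"Tagging": {"Status": "ENABLED" if tagging else "DISABLED"}},
    }


def create_plan(request, guardduty_client=None):
    """Create the plan and return its ID; the client is injectable for tests."""
    if guardduty_client is None:
        import boto3  # imported lazily so the request builder works offline
        guardduty_client = boto3.client("guardduty")
    response = guardduty_client.create_malware_protection_plan(**request)
    return response["MalwareProtectionPlanId"]
```

Because the role must exist before the plan does, the role and the plan tend to be provisioned together in the account that owns the bucket.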

In the end, moving file scanning over to GuardDuty Malware Protection for S3 was absolutely worth it. The cost savings were dramatic, scans finished faster, and the architecture became far simpler to maintain. At the same time, there are still a few sharp edges: the need to manage deletions and re-scans outside the GuardDuty configuration and its limitations when utilizing AWS Organizations.

For my use case, those trade-offs were easy to work around, and the benefits far outweighed the gaps. If you’re running your own ClamAV-based scanner today, or you’re just looking to offload this layer of operational complexity, GuardDuty Malware Protection is well worth exploring. Just go in with a clear understanding of what it solves, and where you might still need a bit of custom glue.

My Top Re:Inforce 2025 sessions

May 8, 2025 by Meg Ashby

My top sessions from the Re:Inforce 2025 Catalog

AWS conferences are known for their incredibly jam-packed days, and with the multitude of sessions going on at once, there is no way you could see even 5% of the sessions! AWS has published several guides based on job function, but if you’re working for a startup / SMB like myself, you’ll find you identify with several of these guides. Below, I’m sharing the sessions I’m most interested in attending at Re:Inforce 2025 as a Cloud Security Engineer for a startup.

Some criteria I use when deciding which sessions to prioritize include content, session level, and session type. In regard to session content, I tend to lean away from sessions that read as extremely AI-heavy. At this point I’m more interested in using AI to aid in exploratory situations or automate tedious investigations, but not leaning much further into AI usage, so any sessions around advanced AI use-cases are not of interest to me. I touch a bit of every domain in my job function, so my recommendations include sessions from a variety of session tracks.

In terms of session level, I swear by the rule of only attending 300 or 400 level sessions, with a strong bias towards 400 level sessions. From past experience at Re:Invent & Re:Inforce, my general rule of thumb is that if the session is about AWS services or use cases I have worked directly with in production, I try to stick to 400 level sessions if at all possible. If it’s a service or use case I’ve used lightly or only heard of in AWS certification exams etc., I am open to 300 level sessions. I find the 200-level sessions aren’t appropriate for active builders in AWS, and generally the same depth of content is covered in AWS-published blog posts.

Lastly, in terms of session type, I typically stay away from Breakout sessions simply because they are often posted to YouTube after the conference is over. I also stay away from prioritizing Lightning talks as they are often too short to get to the level of detail I want out of sessions. However, if there is a lightning talk conveniently located near another session of interest or I have some time to kill, I will often attend these lightning talks at the conferences; I just don’t prioritize them in pre-conference planning. Finally, I tend to deprioritize workshops because I simply get too tired at conferences to enjoy sitting in a chair for 2 hours. Don’t get me wrong, I think these workshops have fantastic content, but I cannot last for 2 hours on conference venue coffee.

With all that said, here are 5 sessions I’m most interested in, along with a few additional bonus sessions I recommend from past conferences:

TDR451: Automate incident response for Amazon EC2 and Amazon EKS

Learn how to streamline incident response using the Automated Forensics Orchestrator solution for Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Elastic Kubernetes Service (Amazon EKS). This session demonstrates how to implement automated workflows triggered by AWS Security Hub findings. Explore implementation prerequisites, customization options, and best practices for enhancing your security operations through automated forensics capabilities. Discover how to standardize response procedures across your Amazon EC2 and Amazon EKS environments. You must bring your laptop to participate.

Gone are the days where we’re hosting applications directly on EC2s, and the legacy runbooks of isolating EC2 instances and preserving memory don’t hold up in EKS-land. I’m hoping this session can give some pre-built solutions for incident response on isolating and examining specific EKS workloads / pods, as it’s been high on my wish list but difficult to prioritize and scope due to the intricacies of Kubernetes workloads and architecture.

IAM333: From static to dynamic: Modernizing AWS access management

Building a robust AWS identity foundation requires moving beyond static credentials. This session deep dives into proven patterns for implementing dynamic, temporary access across your AWS organization. We'll explore real-world challenges of access key dependencies and share practical approaches to transition towards ephemeral credentials using IAM roles, AWS IAM Identity Center, and SAML federation. Through practical examples and lessons learned, discover how to implement secure authentication patterns that scale while reducing operational overhead. Walk away with actionable strategies to strengthen your identity perimeter and modernize your access management approach.

As I’ve grown in my cloud security career I’ve realized there are 3 certainties in life: death, taxes, and product engineers requesting bespoke temporary cloud permissions to “debug X”. We’ve explored other AWS-native solutions before to solve these problems (AWS TEAM, Temporary Elevated Access Broker) but couldn’t get to a place that balanced security to our standards with the flexibility in defining policies that the product engineers need. I’m hoping this session goes beyond what is presented in the TEAM code samples; from the description I’d say it’s 50/50 one way or the other.

NIS431: Cloud network defense: Advanced visibility and analysis on AWS

Organizations struggle to maintain comprehensive network visibility in complex cloud environments. This session demonstrates how to implement advanced network monitoring and analysis using AWS security services. Learn to leverage VPC Flow Logs, AWS Network Firewall, and Amazon Detective for deep packet inspection and traffic analysis. Discover practical implementations of AWS Gateway Load Balancer for enhanced inspection and Amazon CloudWatch for real-time monitoring. Walk away with reference architectures and best practices for building robust network visibility solutions that scale across your AWS environment while maintaining performance. Perfect for security teams modernizing their network defense strategy.

In late 2023 and into 2024 we overhauled our cloud networking strategy, and this included adding AWS Network Firewall for egress traffic. We’re at the point now where we have logging in place to monitor for Network Firewall unexpectedly dropping traffic, but haven’t invested as much in exploratory analysis. In the last year or so, we’ve also been modernizing our AWS multi-account structure, but these projects haven’t yet converged. I’d be eager to attend if this session includes managing network defense in a multi-account set-up.

SEC352: Agentic AI for security: Building intelligent egress traffic controls

Learn to build AI-powered security agents that protect your cloud infrastructure. This hands-on session shows you how to use Amazon Bedrock and Bedrock Agents to create intelligent systems that watch over your network. You'll build Generative AI agents that monitor egress traffic, spot potential threats, and automatically update network firewall to block malicious traffic. Walk away with the skills to implement AI-powered security agents that can reason, decide, and act to protect your cloud infrastructure. You must bring your laptop to participate.

This session suggestion may be an indication I’ve spent long enough looking at the session catalog and have accidentally inhaled some of the AI fumes. However, following on from the session recommendation above, I’ve been considering how we can augment our Network Firewall infrastructure for a while. As a small team, we haven’t been able to prioritize or explore dynamic rule generation due to internal constraints, so I’m interested to see if any tactics presented in this session spark ideas on how we can incorporate AI to improve our own set-up.

DAP331: Architecting a secrets management strategy that scales

Dive deep into architectural patterns for enterprise secrets management in cloud-native environments. We will dissect the implementation complexities of centralized versus decentralized secrets management and discuss the trade-offs between these patterns, including their impact on developer velocity, security, and operational overhead. Attendees will learn how to leverage AWS services to implement a flexible secrets management strategy and manage secrets lifecycle that balances the needs of developers and security teams. We'll also cover best practices for centralized compliance and auditing regardless of your chosen architecture.

Honestly, I’ve never given much thought to what else can be done with secrets in AWS past using Secrets Manager and integrating Secrets Manager resources into EKS clusters using External Secrets Operator. However, the description of this session caught my attention; maybe I’m missing out on some opportunities to improve our secrets posture. That’s one aspect I love about attending conference sessions - being introduced to completely new ideas, tools, and patterns.

And two "bonus" suggestions: sessions I attended at Re:Inforce 2024 that I’m glad to see brought back in 2025, and which I personally recommend:

IAM431: A deep dive on IAM policy evaluation

In this chalk talk, learn how policy evaluation works in detail and walk through some advanced AWS Identity and Access Management (IAM) policy evaluation scenarios. Discover how request context is evaluated, explore delegation patterns AWS services use to make requests, understand various condition keys and their optimal use, and receive recommendations for use of each policy type.

In 2024 (previously titled “The life of an IAM policy”), the waitlist queue for this session was LONG and many were turned away at the door! This session gave a unique peek behind the curtain at how requests to AWS services are authenticated and authorized, and how AWS thinks about security and blast radius in their internal operations. Will this session be the one you describe to your boss to convince them this conference was worth attending? No. However, this was one of the most interesting AWS talks I’ve ever attended, so for the lore I highly recommend it. Shout out to Scott Piper on the Cloud Security Forum Slack group for originally recommending this!

IAM351: Data perimeter challenge

Are you looking to prevent unintended access to your data and mitigate data exposure risks? This hands-on session will demonstrate how data perimeter controls implemented through service control policies (SCPs), resource control policies (RCPs), and VPC endpoint policies can help ensure your corporate data remains within organizational boundaries. You'll build data perimeter policies for real-life security scenarios and validate their effectiveness using an assessment tool. By the end of this session, you'll have gained practical knowledge on how to ensure only trusted identities can access trusted resources from expected networks.

In 2024 this session was named “Data Perimeter in Action”; however, I support the rename because some of the scenarios posed were certainly challenges. What I loved about this session is that it incorporates hands-on learning in a smaller-scale session, giving the perfect mix of engagement and low time commitment you need when your energy is running low at the end of long conference days. I really appreciated how the examples build on each other and get into the weeds of complex scenarios involving multiple data perimeter controls. Bonus points if this session is led by Tatyana Yatskevich - last year their delivery of the content was incredible!

Customizing Config Remediation Notifications with Amazon Q Developer

March 29, 2025 by Meg Ashby

Optimizing Slack-based triage for AWS Config Remediation

To continuously enforce AWS security standards, security engineers often implement AWS Config auto-remediations via Systems Manager Automation (SSM) documents for managing several categories of cloud misconfigurations.

Although this solution is widely known and used (see: AWS Config remediation docs), our security team found it difficult to monitor the auto-remediations done by Config, specifically due to the logging provided from CloudTrail when remediations occurred.

In the first iteration of monitoring when Config performed a remediation, we created a CloudWatch Events query on our CloudTrail CloudWatch logs group to monitor for Config invoking an SSM document with the pattern:

{
  "detail": {
    "eventName": ["StartAutomationExecution"],
    "eventSource": ["ssm.amazonaws.com"],
    "userIdentity": {
      "invokedBy": ["config.amazonaws.com"]
    }
  },
  "source": ["aws.ssm"]
}

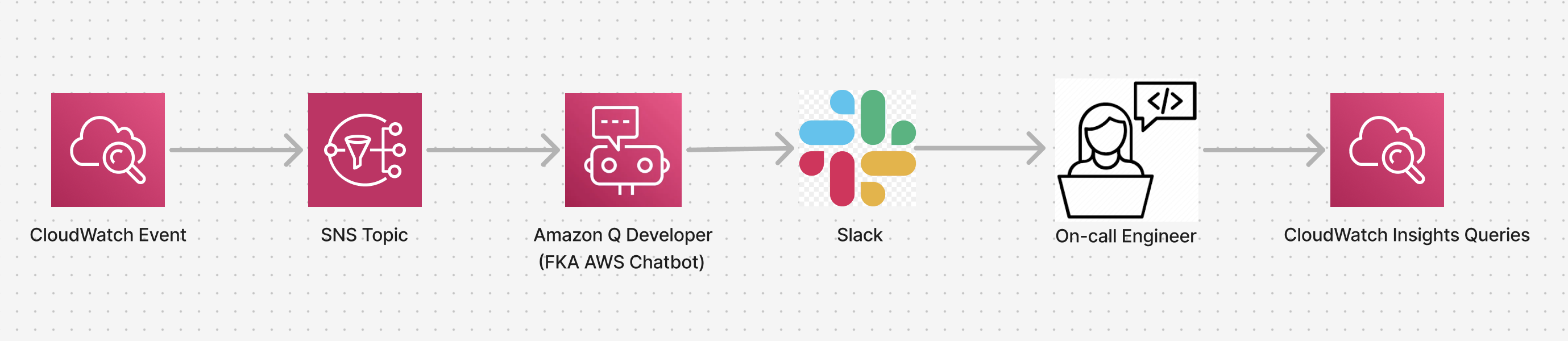

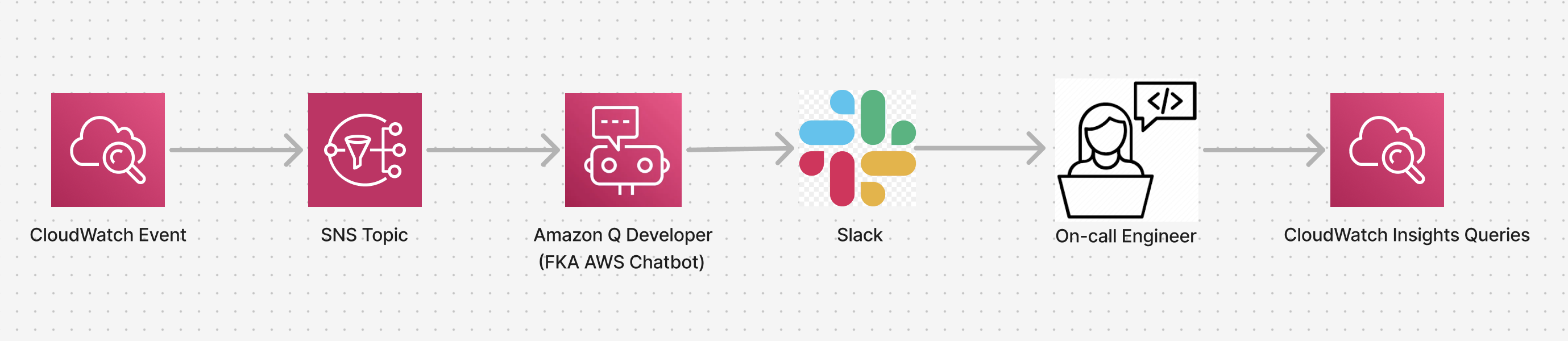

The results from this query were sent to an SNS topic and then to Amazon Q Developer (formerly AWS Chatbot), which forwarded them to our on-call security team via Slack using the architecture below:

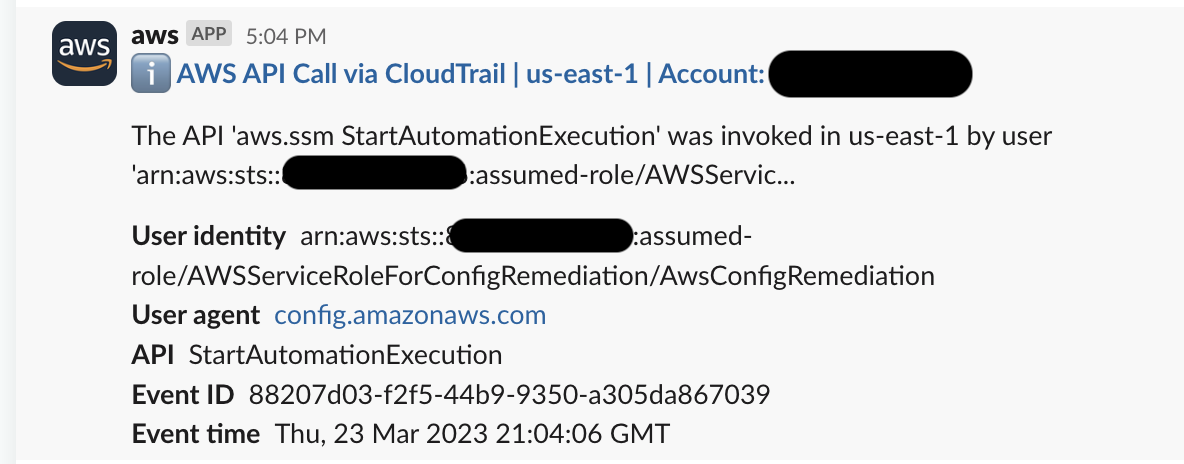

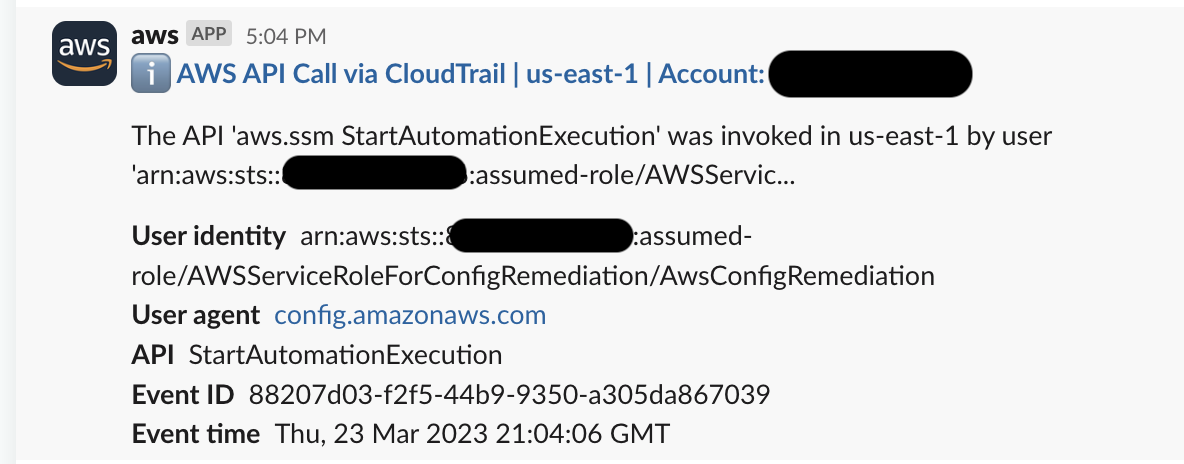

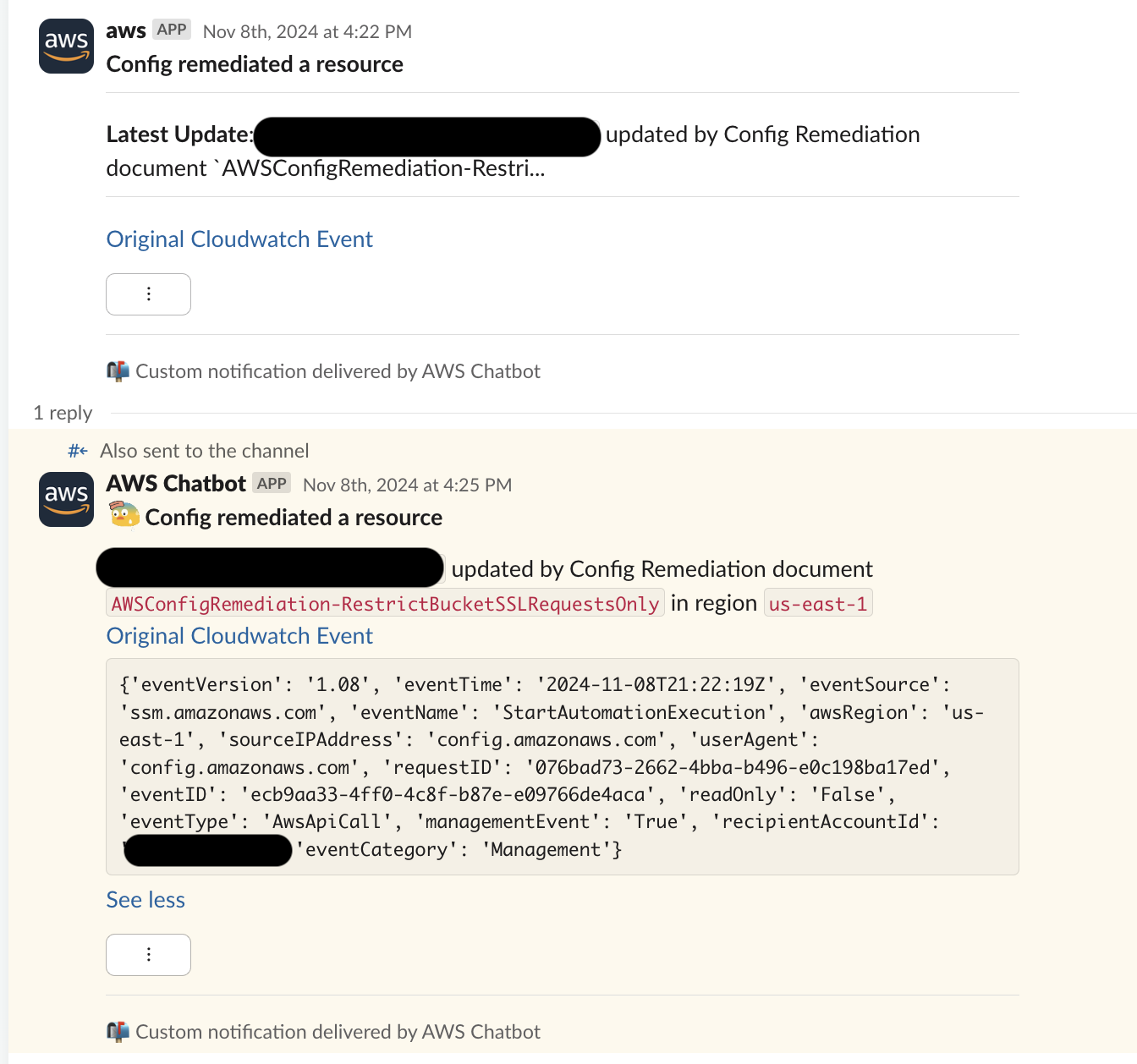

However, the alerts sent to Slack left much to be desired, as can be seen in an example Slack message we received:

We found these Slack alerts lacking due to two issues:

- The results of the CloudWatch query to watch for AWS Config remediations included information about the SSM document Config invoked; however, the parameters passed to the SSM document were obscured and replaced with the text HIDDEN_DUE_TO_SECURITY_REASONS.

For example, below is a sample event of Config invoking the AWS-DisableIncomingSSHOnPort22 SSM document as part of an auto-remediation.

{
  "eventVersion": "1.08",
  "userIdentity": {
    "type": "AssumedRole",
    "principalId": "<<redacted>>:AwsConfigRemediation",
    "arn": "arn:aws:sts::<<redacted>>:assumed-role/AWSServiceRoleForConfigRemediation/AwsConfigRemediation",
    "accountId": "<<redacted>>",
    "accessKeyId": "ASIA......",
    "sessionContext": {
      "sessionIssuer": {
        "type": "Role",
        "principalId": "<<redacted>>",
        "arn": "arn:aws:iam::<<redacted>>:role/aws-service-role/remediation.config.amazonaws.com/AWSServiceRoleForConfigRemediation",
        "accountId": "<<redacted>>",
        "userName": "AWSServiceRoleForConfigRemediation"
      },
      "webIdFederationData": {},
      "attributes": {
        "creationDate": "2025-01-14T21:26:26Z",
        "mfaAuthenticated": "false"
      }
    },
    "invokedBy": "config.amazonaws.com"
  },
  "eventTime": "2025-01-14T21:26:26Z",
  "eventSource": "ssm.amazonaws.com",
  "eventName": "StartAutomationExecution",
  "awsRegion": "us-east-1",
  "sourceIPAddress": "config.amazonaws.com",
  "userAgent": "config.amazonaws.com",
  "requestParameters": {
    "documentName": "AWS-DisableIncomingSSHOnPort22",
    "documentVersion": "1",
    "parameters": {
      "AutomationAssumeRole": [
        "HIDDEN_DUE_TO_SECURITY_REASONS"
      ],
      "SecurityGroupIds": [
        "HIDDEN_DUE_TO_SECURITY_REASONS"
      ]
    }
  },
  "responseElements": {
    "automationExecutionId": "eda2ec0c-c778-4d2b-a59d-aad5b52d5e9a"
  },
  "requestID": "eda2ec0c-c778-4d2b-a59d-aad5b52d5e9a",
  "eventID": "6e2f6630-f439-439d-93ee-841aba72182c",
  "readOnly": false,
  "eventType": "AwsApiCall",
  "managementEvent": true,
  "recipientAccountId": "<<redacted>>",
  "eventCategory": "Management"
}

Since we use Config auto-remediations on various types of cloud resources and to perform various actions, there was no way to determine the necessary information of what resources were updated, what the actions were, or even what account the changes occurred in. Due to this, the severity of the alert couldn’t be determined, so each Config remediation notification had to be treated as high-priority.

- Secondly, Amazon Q Developer did not support manipulation of AWS events, so although some desired fields were available in the original CloudWatch events (such as recipientAccountId), we were unable to modify the message sent to Slack to include these fields.

Due to these limitations, our on-call engineers were doing many manual CloudWatch Insights queries to map the original Config remediation event based on the actions taken by the SSM document to determine the impact of the changes made by Config. As our on-call team grew with various levels of familiarity with AWS and our AWS footprint expanded, it became difficult to manage and disseminate these CloudWatch Insights queries across different AWS regions and accounts. Additionally, this led to responder fatigue as on-call engineers had to leave Slack and navigate AWS in order to complete their analysis.

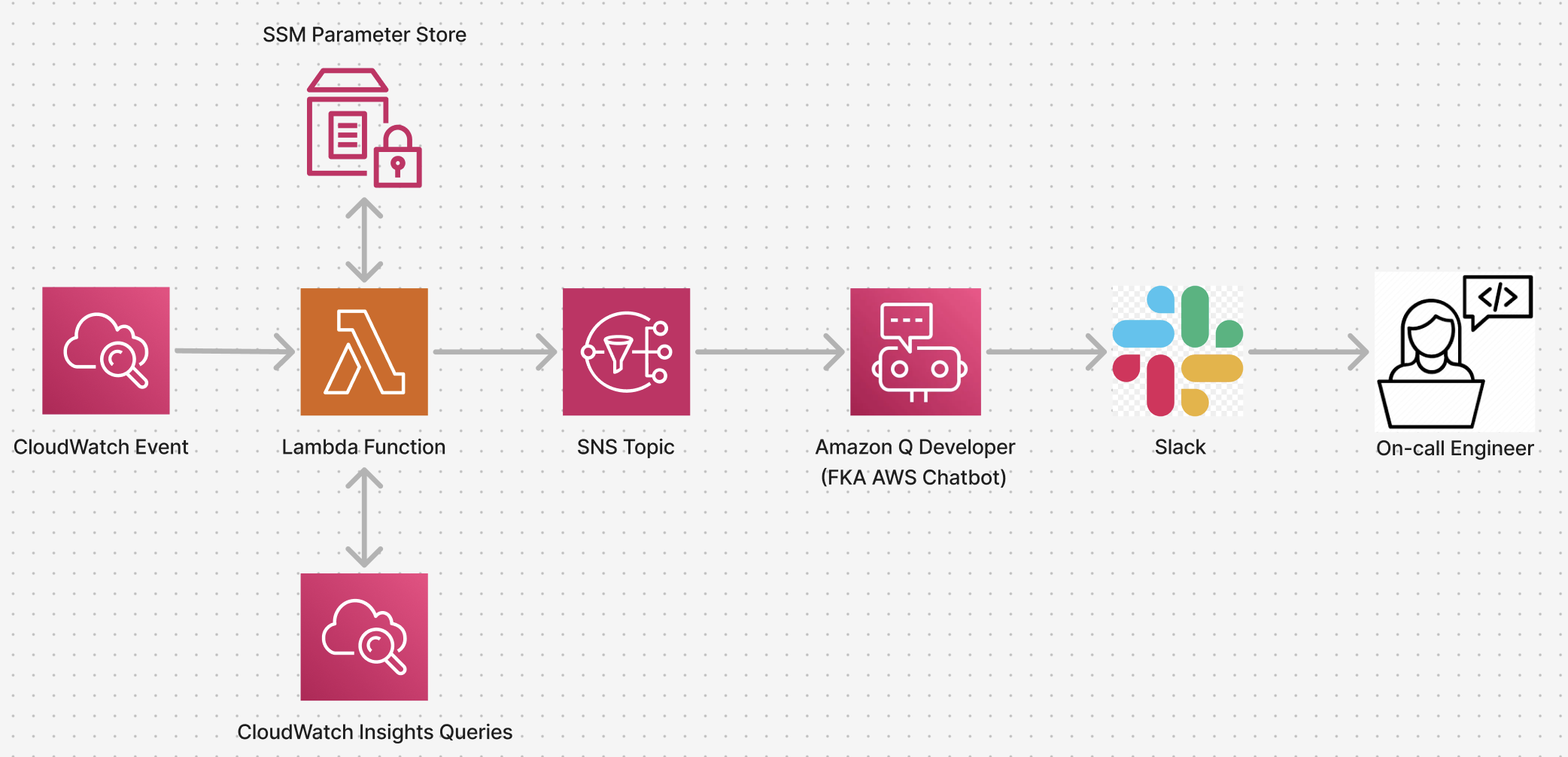

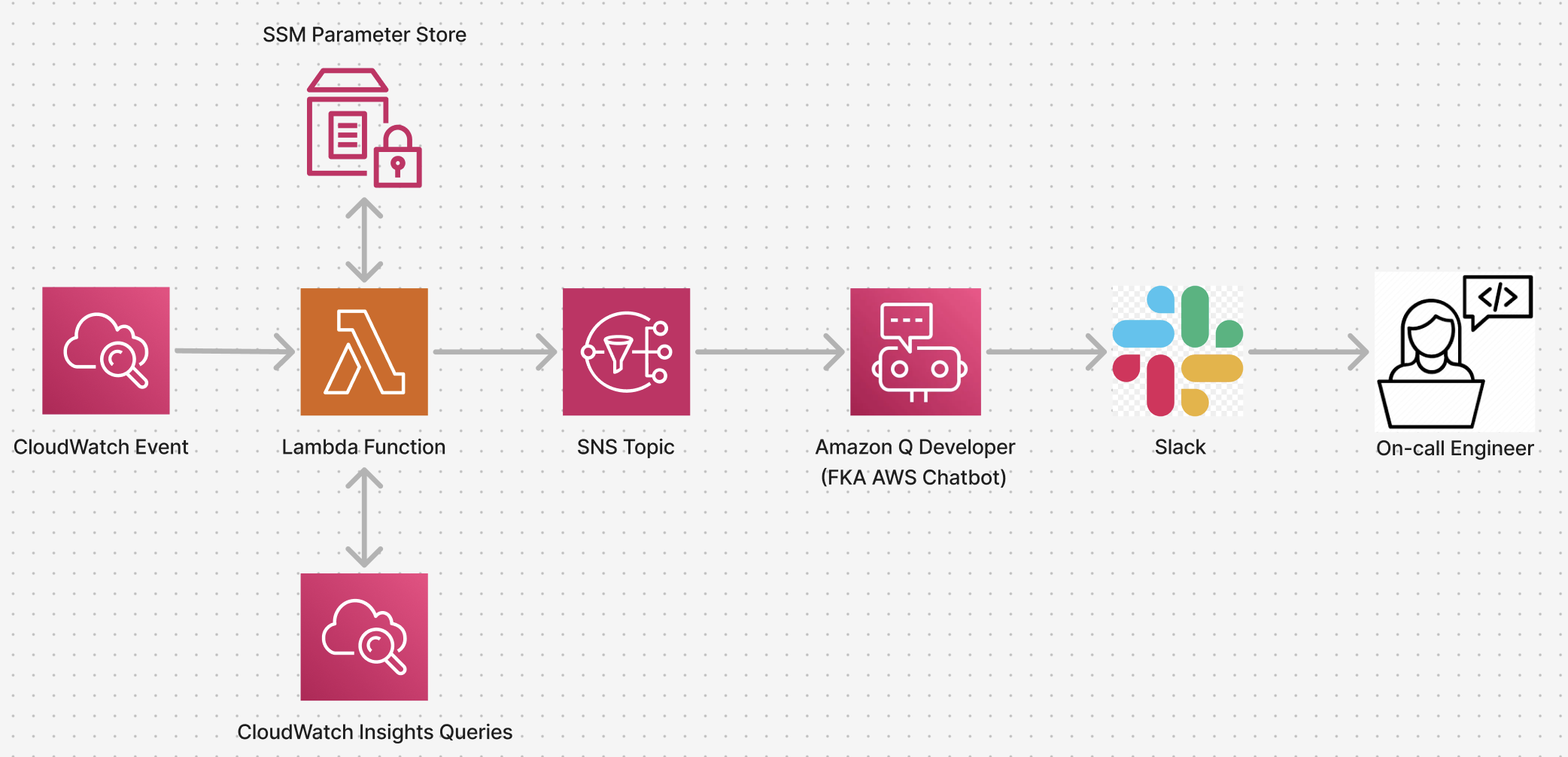

To solve this problem, we introduced an AWS Lambda function to act as an interceptor between the SNS topic, which received events from CloudWatch, and the Amazon Q Developer integration. Additionally, we utilized the new Amazon Q Developer custom notifications feature to construct bespoke Slack notifications which contain all the relevant information for triage:

The Lambda utilizes SSM Parameter Store to hold a mapping between the SSM document Config invoked and the CloudWatch Insights queries on-call engineers previously ran manually. With this new information and the ability to customize fields through Amazon Q Developer, we send our on-call engineers messages with all the information they need to quickly triage the event without ever leaving Slack.
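The lookup described above could be sketched roughly as follows. This is an assumption-laden illustration, not the code from the sample repo: it assumes the mapping is stored as a single JSON parameter keyed by SSM document name, that each query template carries a hypothetical `{execution_id}` placeholder, and that the function receives the CloudTrail record itself (unwrapping the SNS envelope is left out). The helper names are made up for this example.

```python
# Sketch only: map the invoked SSM document to a stored Logs Insights query.
import json
import time


def load_query_map(ssm_client, parameter_name):
    """Read a JSON mapping of SSM document name -> query template."""
    response = ssm_client.get_parameter(Name=parameter_name)
    return json.loads(response["Parameter"]["Value"])


def build_query(query_map, cloudtrail_event):
    """Return the query for this remediation, or None if the document is unmapped."""
    document = cloudtrail_event["requestParameters"]["documentName"]
    template = query_map.get(document)
    if template is None:
        return None
    execution_id = cloudtrail_event["responseElements"]["automationExecutionId"]
    # Plain replace (not str.format) so braces inside a query are left alone.
    return template.replace("{execution_id}", execution_id)


def start_enrichment_query(logs_client, log_group, query,
                           lookback_seconds=900, now=None):
    """Start a CloudWatch Logs Insights query over a recent time window."""
    end = int(now if now is not None else time.time())
    response = logs_client.start_query(
        logGroupName=log_group,
        startTime=end - lookback_seconds,
        endTime=end,
        queryString=query,
    )
    return response["queryId"]
```

The returned query ID would then be polled with `get_query_results` until the query finishes, which is where the delay mentioned in the next paragraph comes in.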

In detail, when a Config remediation occurs, the original unaltered event is sent to Slack through Amazon Q Developer. At the same time, the Lambda invokes a CloudWatch Logs query to determine the full details of the event. However, CloudWatch Logs data may be several minutes delayed from the original CloudTrail event. Because of this, when the CloudWatch Logs query completes, a follow-up message is sent to Amazon Q Developer in the thread of the original Slack message.
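A threaded follow-up of this kind could look roughly like the sketch below, which builds an Amazon Q Developer custom notification and publishes it to SNS. The payload fields follow my understanding of the custom notification schema (`version`, `source`, `content`, `metadata.threadId`). Reusing the automation execution ID as the thread ID is my assumption, as are the helper names; the post does not show how the sample repo ties the follow-up to the original message.

```python
# Sketch only: build and publish a threaded custom notification.
import json


def build_notification(title, description, thread_id, context_fields=None):
    """Return a custom-notification payload for Amazon Q Developer."""
    payload = {
        "version": "1.0",
        "source": "custom",
        "content": {
            "textType": "client-markdown",
            "title": title,
            "description": description,
        },
        # Messages sharing a threadId are grouped into one Slack thread.
        "metadata": {"threadId": thread_id},
    }
    if context_fields:
        payload["metadata"]["additionalContext"] = {
            key: str(value) for key, value in context_fields.items()
        }
    return payload


def publish(sns_client, topic_arn, payload):
    """Send the payload to the SNS topic that Amazon Q Developer subscribes to."""
    return sns_client.publish(TopicArn=topic_arn, Message=json.dumps(payload))
```

For example, the first message might carry only the document name and account, and a second call with the same `thread_id` would add the query results once they are available.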

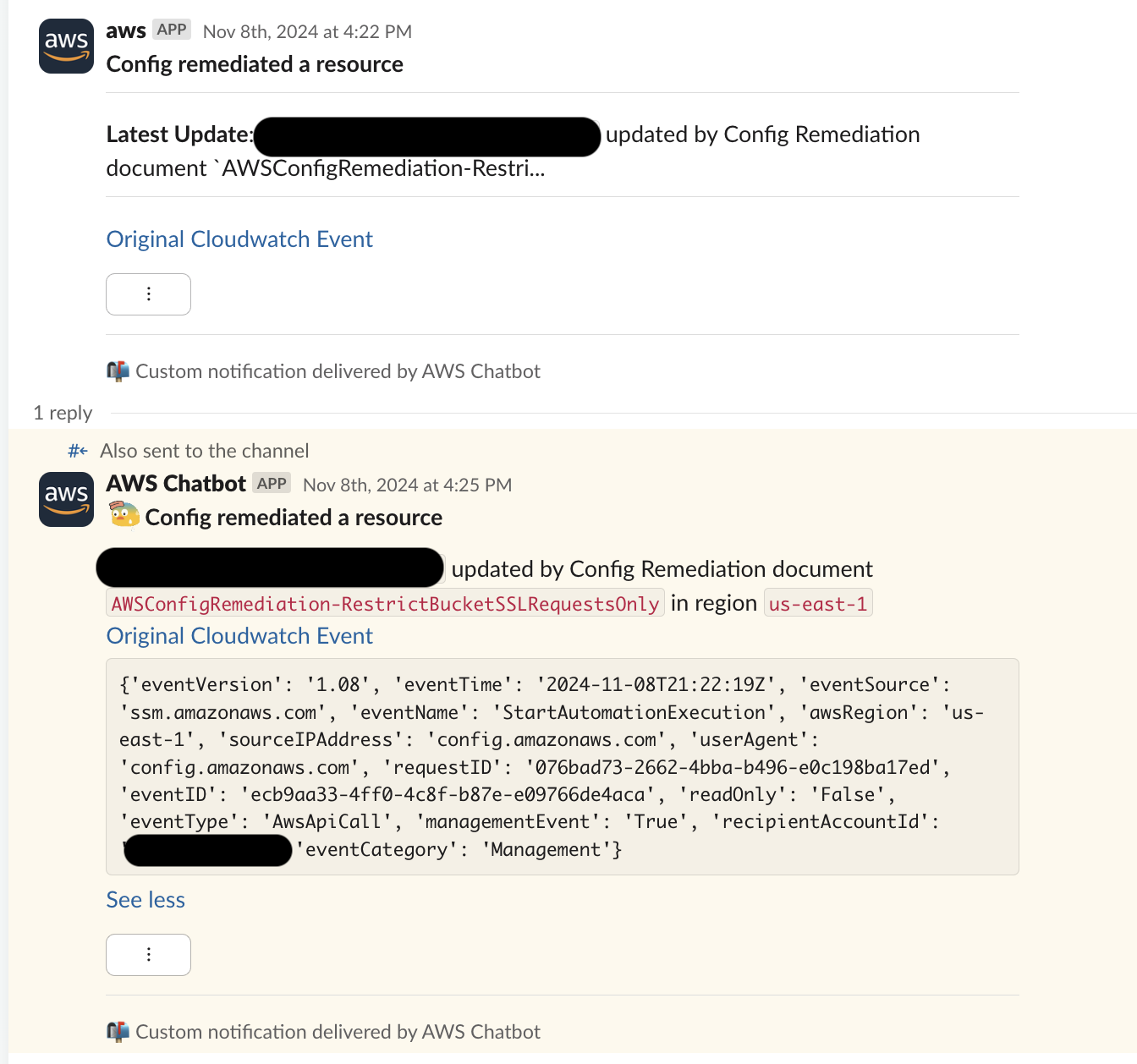

In Slack, these enriched messages look like:

With this improvement, the time it takes to triage Config remediation events went from several minutes down to seconds. Additionally, by incorporating all the necessary information for triage into Slack, on-call engineers can determine whether an investigation needs to be launched directly from their mobile devices, which is especially important for off-hours alerts!

I have provided a sample Terraform & Python GitHub repo for you to use as a reference or incorporate into your work streams!
